My favorite movie of all time is “2001: A Space Odyssey.”

And my favorite character is the computer HAL 9000.

In the future (now past) of the movie, HAL is paradoxically the most human personality. Tasked with running the day-to-day operations of a spaceship, HAL becomes strained to the breaking point when he’s given a command to lie about the mission’s true objectives. He ends up having a psychotic break and killing most of the people he was supposed to protect.

It’s heartbreaking at the end when Dave Bowman slowly turns off the higher functions of HAL’s brain and the supercomputer regresses in intelligence while singing “A Bicycle Built for Two” – one of the first things he was programmed to do.

I’m gonna be honest here – I cry like a baby at that point.

But once I clean up my face and blow my nose, I realize this is science fiction – emphasis on the fiction.

I am well aware that today’s calendar reads 2020, yet our efforts at artificial intelligence are not nearly as advanced as HAL and may never be.

That hasn’t stopped supposedly serious publications like Education Week – “The American Education News Site of Record” – from continuously pretending HAL is right around the corner and ready to take over my classroom.

What’s worse, this isn’t fear mongering of the “beware the coming robo-apocalypse” variety. It’s an invitation!

A few days ago, the online periodical published an article called “Teachers, the Robots Are Coming. But That’s Not a Bad Thing” by Kevin Bushweller.

It was truly one of the dumbest things I’ve read in a long time.

Bushweller, an assistant managing editor at Education Week and Executive Editor at both the Ed Tech Leader and Ed Week’s Market Brief, seems to think it is inevitable that robots will replace classroom teachers.

This is especially true for educators he describes as “chronically low-performing.”

And we all know what he means by that!

These are teachers whose students get low scores on high stakes standardized tests.

Which students are these? Mostly poor and minority children.

These are kids without all the advantages of wealth and class, kids with fewer books in the home and fewer native English speakers as role models, kids suffering from food, housing and healthcare insecurity, kids navigating the immigration system and fearing they or someone they love could be deported, kids faced with institutional racism, kids who’ve lost parents, friends and family to the for-profit prison industry and the inequitable justice system.

And what does our society do to help these kids catch up with their more privileged peers? It underfunds their schools, subjects them to increased segregation, narrows their curriculum, offers them as prey to charter school charlatans – in short, it adds to their hurdles rather than removing them.

So “chronically low-performing” teachers would be those who can’t overcome all these obstacles for their students by just teaching more good.

I can’t imagine why such educators can’t get the same results as their colleagues who teach richer, whiter kids without all these issues. It’s almost as if teachers can’t do it all themselves. And the solution? Robots.

But I’m getting ahead of myself.

Bushweller suggests we fire all the human beings who work in the most impoverished and segregated schools and replace them… with an army of robots.

Yeah.

Seriously.

Black and brown kids won’t get to interact with real adult human beings. Instead, they can connect with the ed tech version of Siri programmed to drill and kill every aspect of the federally mandated standardized test.

Shakespeare’s Miranda famously exclaimed:

“O brave new world, That has such people in’t!”

But the future envisioned by technophiles like Bushweller has NO such people in’t – only robots ensuring the school-to-prison pipeline remains intact for generations to come.

In such a techno-utopia, there will be two tiers of education. The rich will get human teachers, and the poor and minorities will get Bluetooth-connected voice services like Alexa.

But when people like me complain, Bushweller gaslights us, dismissing us as narrow-minded.

He says:

“It makes sense that teachers might think that machines would be even worse than bad human educators. And just the idea of a human teacher being replaced by a robot is likely too much for many of us, and especially educators, to believe at this point.”

The solution, he says, isn’t to resist being replaced but to actually help train our mechanistic successors:

“…educators should not be putting their heads in the sand and hoping they never get replaced by an AI-powered robot. They need to play a big role in the development of these technologies so that whatever is produced is ethical and unbiased, improves student learning, and helps teachers spend more time inspiring students, building strong relationships with them, and focusing on the priorities that matter most. If designed with educator input, these technologies could free up teachers to do what they do best: inspire students to learn and coach them along the way.”

To me this sounds very similar to a corporate drone rhapsodizing on the merits of downsizing: Sure, your job is being sent overseas, but you get to train your replacement!

Forgive me if I am not sufficiently grateful for that privilege.

Maybe I should be relieved that he at least admits robots may not be able to replace EVERYTHING teachers do. At least, not yet. In the meantime, he expects robots could become co-teachers or effective tools in the classroom to improve student learning by taking over administrative tasks, grading, and classroom management.

And this is the kind of nonsense teachers often get from administrators who’ve fallen under the spell of the Next Big Thing – iPads, software packages, data management systems, etc.

However, classroom teachers know the truth. This stuff is more often than not overhyped bells and whistles. It’s stuff that CAN be used to improve learning but rarely with more clarity and efficiency than the way we’re already doing it. And the use of edtech opens up so many dangers to students – loss of privacy, susceptibility to being data mined, exposure to unsafe and untried programs, unscrupulous advertising, etc.

Bushweller cites a plethora of examples of robots being used in other parts of the world to improve learning, and they are of just this type – gimmicky and shallow.

It reminds me of IBM’s Watson computing system, which in 2011 famously beat Ken Jennings and Brad Rutter, two of the best players ever, at the game show Jeopardy.

What is overhyped bullcrap, Alex?

Now that Watson has been applied to the medical field, recommending treatments for cancer patients, doctors are seeing that the emperor has no clothes. Its recommendations have been dangerous and incorrect – for instance, suggesting a medication that can increase bleeding for a hypothetical patient who was already suffering from severe bleeding.

Do we really want to apply the same kind of artificial intelligence to children’s learning?

AI will never be able to replace human beings. It can only displace us.

What I mean by that is this: We can put an AI system in the same position as a human being, but it will never be of the same high quality.

It is a displacement, a disruption, but not an authentic replacement of equal or greater value.

In his paper “The Rhetoric and Reality of Anthropomorphism in Artificial Intelligence,” David Watson explains why.

Watson (no relation to IBM’s supercomputer), of the Oxford Internet Institute and the Alan Turing Institute, writes that AIs do not think in the same way humans do – if what they do can even accurately be described as thinking at all.

These are algorithms, not minds. They are sets of rules, not contemplations.

An algorithm of a smile would specify which muscles to move and when. But it wouldn’t be anything a live human being would mistake for an authentic expression of a person’s emotion. At best it would be a parabola, at worst a rictus.

In his recent paper in Minds and Machines, Watson outlines three main ways deep neural networks (DNNs) like the ones we’re considering here “think” and “learn” differently from humans.

1) DNNs are easily fooled. While both humans and AIs can recognize things like a picture of an apple, computers are much more easily led astray. Computers are more likely to confuse the background with the foreground, for instance, while human beings naturally grasp the difference. As a result, humans are less distracted by background noise.

2) DNNs need much more information to learn than human beings do. People need relatively few examples of a concept like “apple” to be able to recognize one. DNNs need thousands of examples to do the same thing. Human toddlers learn far more readily than the most advanced AI.

3) DNNs are much more focused on details and less on the bigger picture. For example, a DNN could successfully label a picture of Diane Ravitch as a woman, a historian, and an author. However, switching the position of her mouth and one of her eyes could actually increase the DNN’s confidence in that prediction. The computer wouldn’t see anything wrong with the image, even though to human eyes something would be glaringly incorrect.

“It would be a mistake to say that these algorithms recreate human intelligence,” Watson says. “Instead, they introduce some new mode of inference that outperforms us in some ways and falls short in others.”

Obviously, the technology may improve and change, but it seems more likely that AIs will always be different. In fact, that’s kind of what we want from them – to outperform human minds in some ways.

However, the gap between humanity and AI should never be glossed over.

I think that’s what technophiles like Bushweller are doing when they suggest robots could adequately replace teachers. Robots will never do that. They can only be tools.

For instance, only the loneliest people frequently have long conversations with Siri or Alexa. After all, we know there is no one else really there. These wireless Internet voice services are just a trick – an illusion of another person. We turn to them for information but not friendship.

The same goes for teachers. Most of the time, we WANT to be taught by a real human person. If we fear judgment, we may want to look up discrete facts on a device. But if we want guidance, encouragement, direction or feedback, we need a person. AIs can imitate such things but never as well as the real thing.

So we can displace teachers with these subpar imitations. But once the novelty wears off – and it does – we’re left with a lower-quality instructor and a subpar education.

The computer HAL is not real. To borrow a phrase from “Blade Runner,” the film based on science fiction author Philip K. Dick’s novel, artificial intelligence is not yet “more human than human.”

Maybe it never will be.

The problem is not narrow-minded teachers unwilling to sacrifice their jobs for some nebulous techno-utopia. The problem is market-based solutions that ignore the human cost of steamrolling over educators and students for the sake of profits.

As a society, we must commit ourselves to a renewed ethic of humanity. We must value people more than things.

And that includes a commitment never even to try doing without human teachers as guides for the most precious people in our lives – our children.

“Algorithms are not ‘just like us’… by anthropomorphizing a statistical model, we implicitly grant it a degree of agency that not only overstates its true abilities, but robs us of our own autonomy… It is always humans who choose whether or not to abdicate this authority, to empower some piece of technology to intervene on our behalf. It would be a mistake to presume that this transfer of authority involves a simultaneous absolution of responsibility. It does not.”

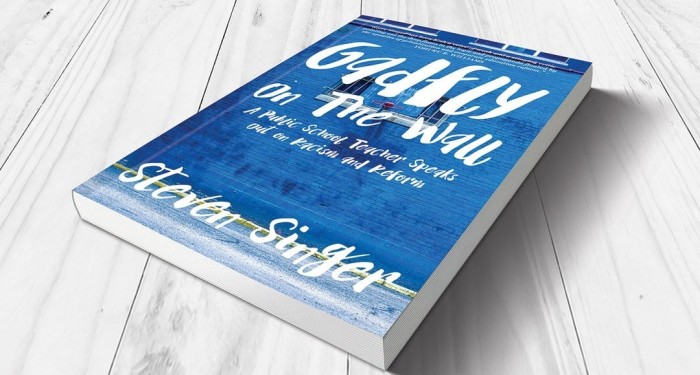

Like this post? I’ve written a book, “Gadfly on the Wall: A Public School Teacher Speaks Out on Racism and Reform,” now available from Garn Press. Ten percent of the proceeds go to the Badass Teachers Association. Check it out!

Thanks for your excellent analysis. Steve Kolber gave a short 10-minute presentation on the automation of teaching. The quality of the video is not so good, but the content is excellent – https://youtu.be/f8oJe9EgLPw

Thanks for commenting, George. The video you linked to is over an hour long though. Perhaps you had a different one in mind. I’m generally not a flipped classroom fan, either, but the realities in my urban western Pennsylvania district may be very different from Australia.

Sorry, it’s just the first 10 minutes of that video.

Thank you, Steven. Here is my blog post from several years ago on the misguided and misanthropic thinking that goes into online instruction as a magic elixir for “teaching/learning.” https://resseger.wordpress.com/…/27/story-telling-species/

oops–Sorry. Here’s the correct link, I hope: https://resseger.wordpress.com/2016/05/27/story-telling-species/
